SparseNeuS: Fast Generalizable Neural Surface Reconstruction from Sparse Views

Abstract

We introduce SparseNeuS, a novel neural-rendering-based method for the task of surface reconstruction from multi-view images. This task becomes more difficult when only sparse images are provided as input, a scenario where existing neural reconstruction approaches usually produce incomplete or distorted results. Moreover, their inability to generalize to unseen scenes impedes their application in practice. In contrast, SparseNeuS can generalize to new scenes and works well with sparse images (as few as 2 or 3). SparseNeuS adopts the signed distance function (SDF) as the surface representation and learns generalizable priors from image features by introducing geometry encoding volumes for generic surface prediction. In addition, several strategies are introduced to effectively leverage sparse views for high-quality reconstruction, including 1) a multi-level geometry reasoning framework to recover the surfaces in a coarse-to-fine manner; 2) a multi-scale color blending scheme for more reliable color prediction; 3) a consistency-aware fine-tuning scheme to control the inconsistent regions caused by occlusion and noise. Extensive experiments demonstrate that our approach not only outperforms the state-of-the-art methods but also exhibits good efficiency, generalizability, and flexibility.
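This page does not say how a geometry encoding volume is built from image features. A common approach in multi-view stereo cost volumes, assumed here rather than taken from this page, is to project each voxel into every source view, sample a 2D feature there, and take the per-channel variance across views. That yields a descriptor whose size does not depend on how many views are supplied, which matters when only 2 or 3 images are available. The sketch covers only this aggregation step for a single voxel; the helper `aggregate_voxel_features` is hypothetical, and the learned 3D network that would process the resulting volume is omitted.

```python
from statistics import pvariance


def aggregate_voxel_features(view_features: list[list[float]]) -> list[float]:
    """Hypothetical helper: fuse per-view features sampled at one voxel.

    view_features[v][c] is channel c of the 2D feature that source view v
    contributes after the voxel centre is projected into that view.
    Returns the per-channel population variance across views.
    """
    if len(view_features) < 2:
        raise ValueError("need at least two views to measure agreement")
    num_channels = len(view_features[0])
    if any(len(f) != num_channels for f in view_features):
        raise ValueError("every view must provide the same number of channels")
    # Low variance: the views agree, a hint that a surface may lie here.
    # The output length is fixed, so 2 or 3 views give the same shape as 10.
    return [pvariance([f[c] for f in view_features]) for c in range(num_channels)]


# Three views (the sparse setting), two feature channels each.
agreeing = [[1.0, 0.5], [1.0, 0.5], [1.0, 0.5]]
disagreeing = [[0.0, 0.5], [1.0, 0.5], [2.0, 0.5]]
print(aggregate_voxel_features(agreeing))  # [0.0, 0.0]
print(aggregate_voxel_features(disagreeing))  # first channel about 0.667, second 0.0
```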

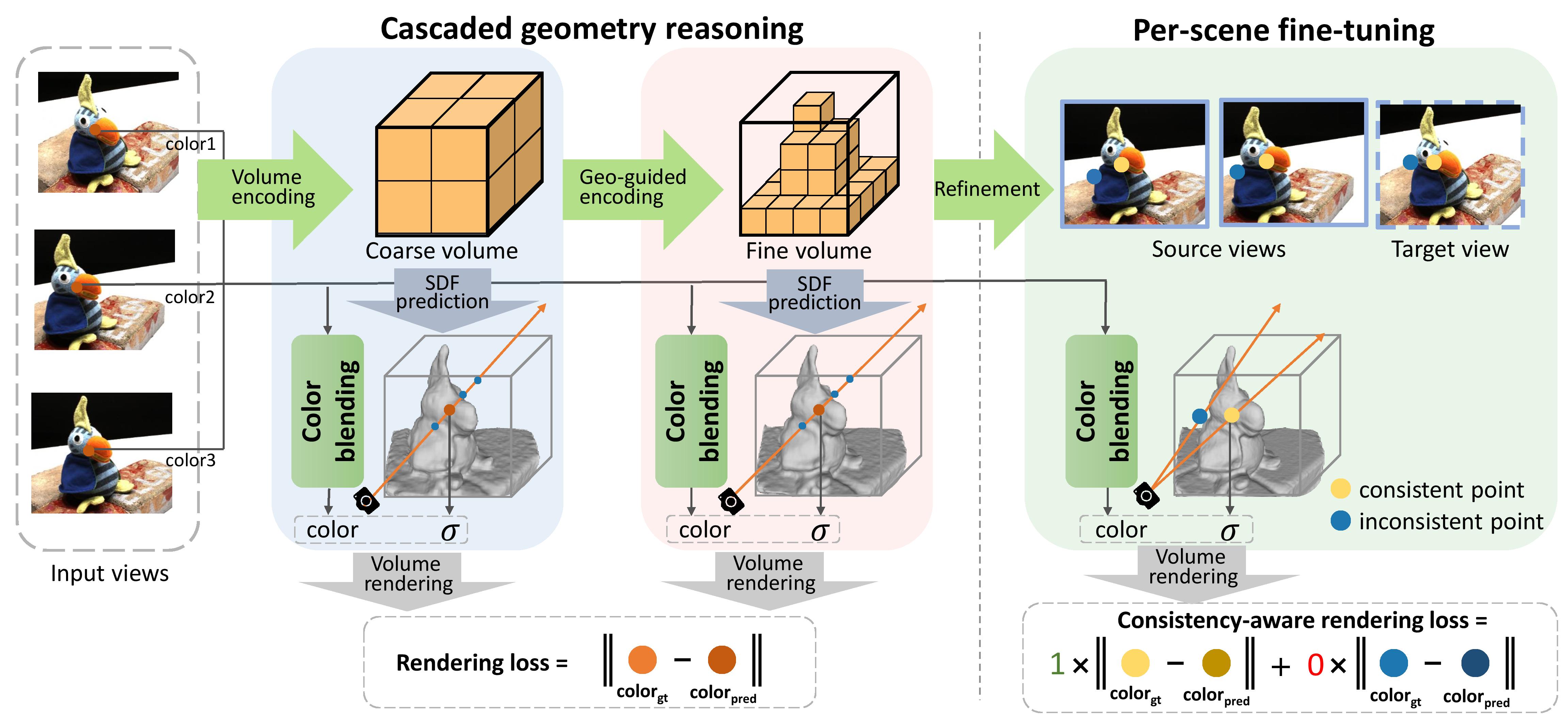

Pipeline

Overview of SparseNeuS. The cascaded geometry reasoning scheme first constructs a coarse volume that encodes relatively global features to recover the basic geometry, and then constructs a fine volume, guided by the coarse level, to refine that geometry. Finally, a consistency-aware fine-tuning strategy adds subtle geometric details, yielding high-quality reconstructions with fine-grained surfaces. In addition, a multi-scale color blending module is used for more reliable color prediction.

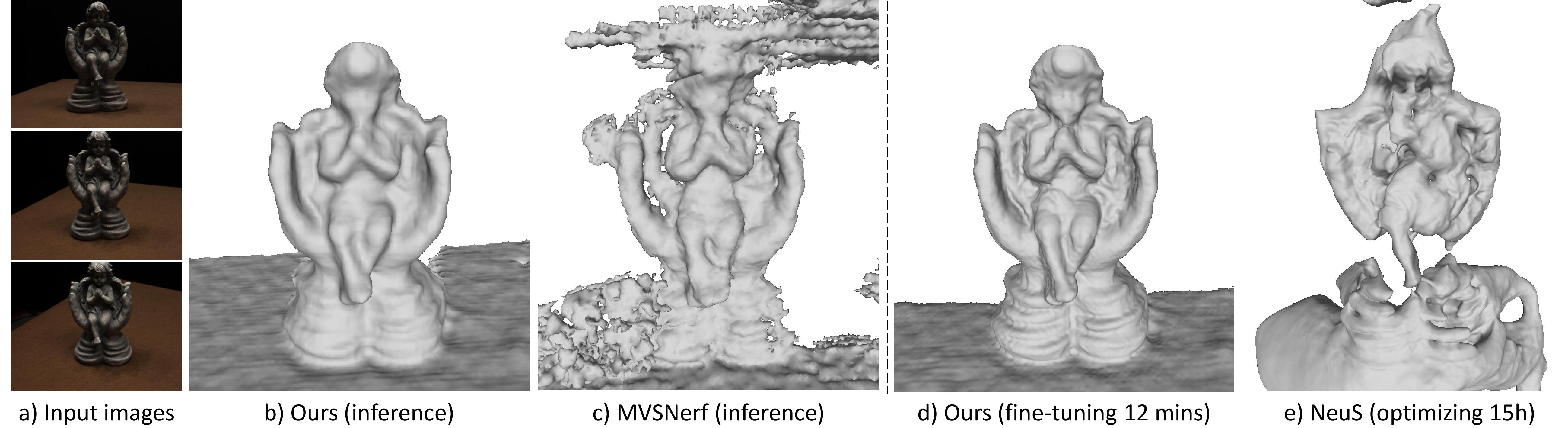
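One way to read "a fine volume guided by the coarse level" is that fine voxels are created only where the coarse level predicts a surface nearby. The sketch assumes exactly that: coarse voxels whose SDF magnitude lies within a band are subdivided by a factor of 2 per axis, and all others are skipped. The band, the factor, and the function name `select_fine_voxels` are hypothetical choices for illustration, not values from SparseNeuS.

```python
from itertools import product

# A voxel index on a regular grid (hypothetical layout).
Index = tuple[int, int, int]


def select_fine_voxels(
    coarse_sdf: dict[Index, float], band: float, factor: int = 2
) -> list[Index]:
    """Hypothetical cascade step: refine only coarse voxels near the surface.

    coarse_sdf maps a coarse voxel index to the SDF predicted at its centre.
    Voxels with |SDF| <= band are split into factor**3 fine voxels; the
    rest are dropped from the fine level.
    """
    fine: list[Index] = []
    for (i, j, k), sdf in coarse_sdf.items():
        if abs(sdf) > band:
            continue  # coarse level says the surface is far away
        for a, b, c in product(range(factor), repeat=3):
            fine.append((i * factor + a, j * factor + b, k * factor + c))
    return sorted(fine)


# A row of four coarse voxels; the surface crosses between x=1 and x=2.
coarse = {(0, 0, 0): 0.9, (1, 0, 0): 0.05, (2, 0, 0): -0.02, (3, 0, 0): -0.8}
fine = select_fine_voxels(coarse, band=0.1)
print(len(fine))  # 16: two coarse voxels kept, 8 fine voxels each
```

Restricting the fine level to a narrow band around the coarse surface keeps the number of fine voxels roughly proportional to the surface area rather than to the full volume, which is the usual motivation for such cascades.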

Qualitative comparison on DTU

With the strong generalizability learned from the DTU dataset, our model can generalize to new scenes and generate reasonable geometry with a single forward pass of the network. With the proposed consistency-aware fine-tuning scheme, the reconstructions improve substantially over those from direct inference, and the noisy, distorted background geometry is removed.
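This page does not state how inconsistent regions are detected during fine-tuning. As a stand-in, the sketch uses normalized cross-correlation (NCC) between a reference-view patch and the corresponding source-view patches, and keeps a pixel in the loss only if enough source views agree. NCC, the 0.5 threshold, and the helper names `ncc` and `consistency_mask` are assumptions for illustration, not SparseNeuS's actual criterion.

```python
import math


def ncc(patch_a: list[float], patch_b: list[float]) -> float:
    """Normalized cross-correlation of two equally sized grayscale patches."""
    n = len(patch_a)
    if n == 0 or n != len(patch_b):
        raise ValueError("patches must be non-empty and the same size")
    mean_a = sum(patch_a) / n
    mean_b = sum(patch_b) / n
    da = [x - mean_a for x in patch_a]
    db = [y - mean_b for y in patch_b]
    denom = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    if denom == 0.0:
        return 0.0  # textureless patch: correlation undefined, treated as not agreeing
    return sum(x * y for x, y in zip(da, db)) / denom


def consistency_mask(
    ref_patch: list[float],
    src_patches: list[list[float]],
    threshold: float = 0.5,
    min_views: int = 1,
) -> bool:
    """Hypothetical rule: keep a pixel in the loss if enough views agree."""
    agreeing = sum(1 for p in src_patches if ncc(ref_patch, p) >= threshold)
    return agreeing >= min_views


ref = [0.1, 0.4, 0.8, 0.3]
shifted = [0.2, 0.5, 0.9, 0.4]  # same pattern, brighter: consistent
flipped = [0.3, 0.8, 0.4, 0.1]  # different pattern, e.g. an occluder
print(consistency_mask(ref, [shifted, flipped]))  # True
print(consistency_mask(ref, [flipped]))  # False
```

A pixel hidden by an occluder in a source view tends to produce a low NCC for that view, so masking it out keeps the fine-tuning loss from pulling the geometry toward the occluder.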

Qualitative comparison on BlendedMVS

Although it is trained only on DTU, our model still generalizes well to unseen scenes from the BlendedMVS dataset.

Novel view synthesis after per-scene fine-tuning

Citation

@inproceedings{long2022sparseneus,
  title={{SparseNeuS}: Fast Generalizable Neural Surface Reconstruction from Sparse Views},
  author={Long, Xiaoxiao and Lin, Cheng and Wang, Peng and Komura, Taku and Wang, Wenping},
  booktitle={European Conference on Computer Vision},
  pages={210--227},
  year={2022},
  organization={Springer}
}
